
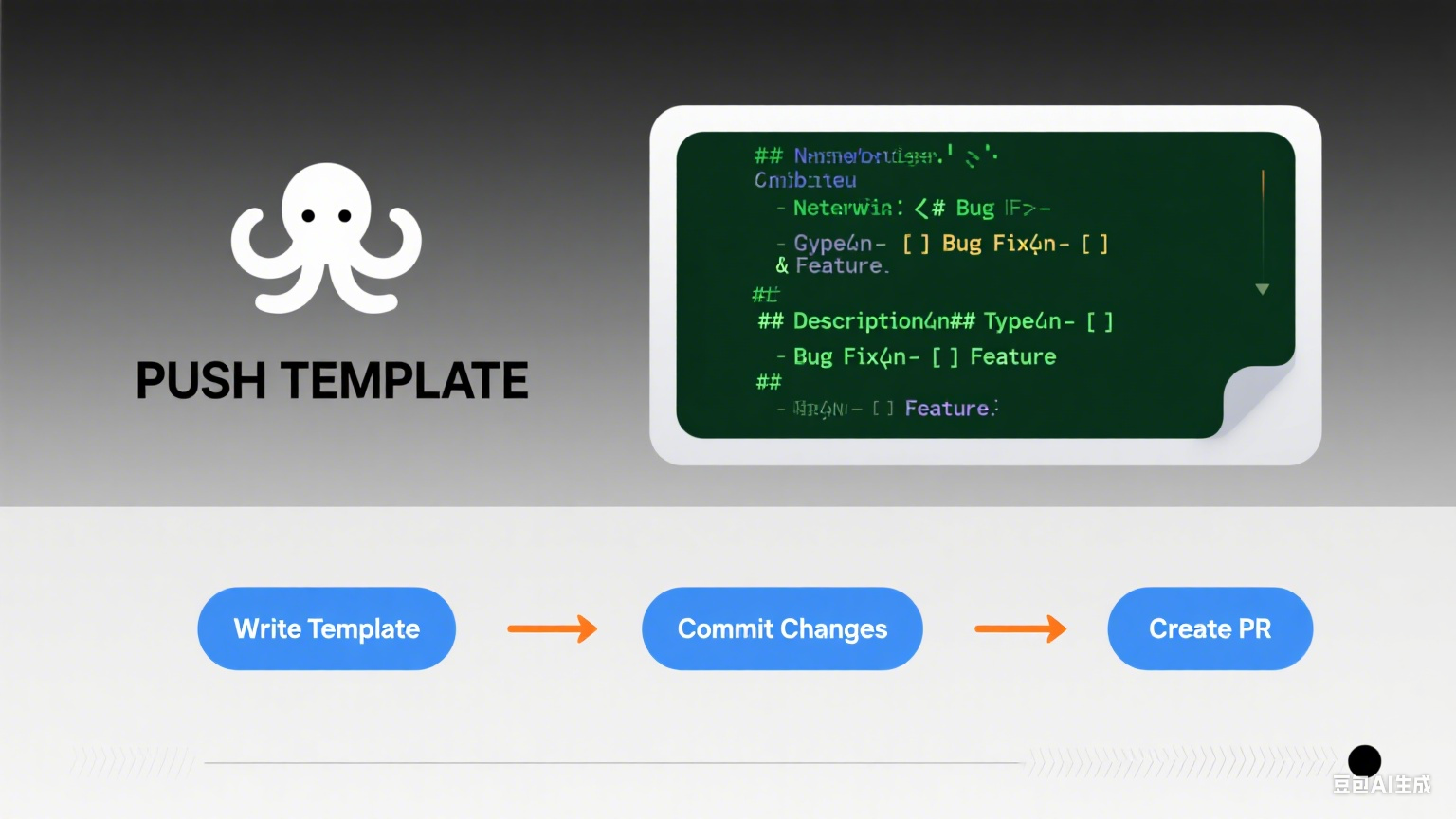

Open the Weibo hot-search list (https://s.weibo.com/top/summary).

Import the libraries

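```python
# Third-party packages: pip install requests beautifulsoup4 lxml
import requests                          # HTTP requests
from bs4 import BeautifulSoup as BS      # HTML parsing (uses the lxml parser below)
import smtplib                           # SMTP client
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText
import time
```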

Define the function that fetches the Weibo hot-search list

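```python
def get_line():
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36",
        # The author's real cookie is withheld; substitute the cookie from your own Weibo session
        "Cookie": "4210214103(redacted)",
    }
    url = "https://s.weibo.com/top/summary"
    resp = requests.get(url, headers=headers, timeout=10)
    resp.raise_for_status()  # fail loudly on HTTP errors instead of parsing an error page
    soup = BS(resp.content.decode("utf-8"), "lxml")
    # Work row by row so that keyword, heat and link always come from the same entry
    rows = soup.select("#pl_top_realtimehot > table > tbody > tr")
    line = "\n"
    # Row 0 is the pinned topic, so take rows 1-50 (the top 50)
    for i, row in enumerate(rows[1:51], start=1):
        tips = row.select_one("td.td-02 > a")    # keyword link
        hot = row.select_one("td.td-02 > span")  # heat value; may be missing on some rows
        if tips is None:
            continue
        x = tips.get("href")                     # relative URL of the topic page
        heat = hot.text.strip() if hot else "N/A"
        line += f"Top{i}: {tips.text} Heat: {heat}\nLink: https://s.weibo.com{x}\n\n"
    return line
```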

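get_line scrapes the Weibo hot-search list and returns it as formatted text.

headers: the HTTP request headers, a browser User-Agent plus a Cookie (the author's value is withheld).

url: the URL of the Weibo hot-search page.

soup: the returned HTML, parsed with BeautifulSoup using the lxml parser.

tips, hot, x: CSS selectors that pick out each entry's keyword, heat value, and link.

line: a string, initialized to a newline, that accumulates the formatted entries.

for loop: walks through the top 50 entries (the first row, the pinned topic, is skipped), formats each one, and appends it to line.

return line: returns the string holding all the hot-search entries.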
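The string-building step in get_line can be checked without touching the network. The sketch below assumes each table row has already been reduced to a hypothetical (keyword, heat, href) tuple, with heat set to None when a row has no heat value; format_hot_list and the sample rows are illustrative only, not part of the original script.

```python
def format_hot_list(rows, limit=50):
    """Format hot-search rows into a layout similar to get_line's.

    rows: list of (keyword, heat, href) tuples, one per table row.
    Row 0 is assumed to be the pinned topic and is skipped.
    """
    line = "\n"
    for rank, (keyword, heat, href) in enumerate(rows[1:limit + 1], start=1):
        heat_text = heat if heat else "N/A"  # some rows may carry no heat value
        line += f"Top{rank}: {keyword} Heat: {heat_text}\nLink: https://s.weibo.com{href}\n\n"
    return line


# Hypothetical sample rows; real data would come from the parsed page
sample = [
    ("Pinned topic", None, "/weibo?q=pinned"),
    ("Topic A", "1234567", "/weibo?q=A"),
    ("Topic B", None, "/weibo?q=B"),
]
print(format_hot_list(sample))
```

Keeping keyword, heat and link together in one tuple per row means a row without a heat value cannot shift the heat numbers onto the wrong keywords.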

Define the function that sends the email

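```python
def send_email(line):
    sender_email = "941521358@qq.com"
    receiver_email = "ldyertop@qq.com"
    # For QQ Mail this is the SMTP authorization code from the mailbox settings,
    # not the account login password; the author's real value is withheld
    password = "4210214103(redacted)"
    message = MIMEMultipart()
    message["From"] = sender_email
    message["To"] = receiver_email
    message["Subject"] = "Ldyer's Weibo hot searches"
    # Declare UTF-8 explicitly because the hot-search text is Chinese
    message.attach(MIMEText(line, "plain", "utf-8"))
    # Port 465 is QQ Mail's SMTP-over-SSL port
    with smtplib.SMTP_SSL("smtp.qq.com", 465) as server:
        server.login(sender_email, password)
        server.sendmail(sender_email, receiver_email, message.as_string())
```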

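send_email sends the formatted list as an email.

sender_email, receiver_email, password: the sender's address, the recipient's address, and the credential used to log in to the SMTP server. For QQ Mail this is the authorization code generated after enabling SMTP in the mailbox settings, not the account's login password.

message: a MIMEMultipart object that holds the email's headers and parts.

message['From'], message['To'], message['Subject']: set the sender, recipient, and subject.

message.attach: adds the text to the email as a plain-text part.

with smtplib.SMTP_SSL: opens an SSL connection to QQ Mail's SMTP server (smtp.qq.com, port 465); the connection is closed when the with block ends.

server.login: logs in to the mailbox.

server.sendmail: sends the email.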
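Before pointing the loop at a real mailbox, it can help to build the message offline and parse it back to confirm that Chinese text survives encoding. The sketch below uses only the standard email package; build_message and the example.com addresses are hypothetical, and setting the subject through email.header.Header with UTF-8 is a choice made here, not something the original does.

```python
import email
from email.header import Header, decode_header, make_header
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_message(sender, receiver, subject, body):
    """Assemble a MIMEMultipart message like send_email's, without sending it."""
    message = MIMEMultipart()
    message["From"] = sender
    message["To"] = receiver
    message["Subject"] = Header(subject, "utf-8")     # RFC 2047-encode a non-ASCII subject
    message.attach(MIMEText(body, "plain", "utf-8"))  # UTF-8 body, base64 transfer encoding
    return message


raw = build_message("sender@example.com", "receiver@example.com",
                    "微博热搜", "Top1: 示例 Heat: 123\n").as_string()

# Parse the serialized message back, roughly as a mail client would
parsed = email.message_from_string(raw)
subject = str(make_header(decode_header(parsed["Subject"])))
part = parsed.get_payload()[0]
body = part.get_payload(decode=True).decode(part.get_content_charset())
print(subject)
print(body)
```

If the round trip prints the original subject and body, the same message should be safe to hand to server.sendmail.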

Send the email in an endless loop

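```python
while True:
    try:
        send_email(get_line())
        print("Email sent")
    except Exception as e:  # keep looping if one fetch or send fails
        print("Failed:", e)
    time.sleep(20)  # wait 20 seconds before the next round
```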
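Sending every 20 seconds mails the list even when nothing has changed. A minimal variant, assuming it is acceptable to skip identical lists, wraps the loop in a hypothetical run() helper that takes the fetch and send functions as parameters, so it can be tested with stand-ins and stopped after a fixed number of rounds. In the real script the call would be run(get_line, send_email).

```python
import time


def run(fetch, send, interval=20, rounds=None, skip_unchanged=True):
    """Call fetch() and send() repeatedly, like the while-True loop.

    rounds=None loops forever; an integer stops after that many rounds.
    With skip_unchanged=True, content identical to the last sent
    content is not sent again. Returns the number of sends.
    """
    last_sent = None
    sent_count = 0
    done = 0
    while rounds is None or done < rounds:
        content = fetch()
        if not (skip_unchanged and content == last_sent):
            send(content)
            last_sent = content
            sent_count += 1
        done += 1
        if rounds is None or done < rounds:
            time.sleep(interval)  # no sleep after the final round


    return sent_count


# Stand-in fetch/send functions instead of get_line/send_email
outbox = []
pages = iter(["A", "A", "B"])
n = run(lambda: next(pages), outbox.append, interval=0, rounds=3)
print(n, outbox)  # 2 ['A', 'B']
```

This sketch has no error handling of its own; in practice it would be combined with a try/except around each round.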

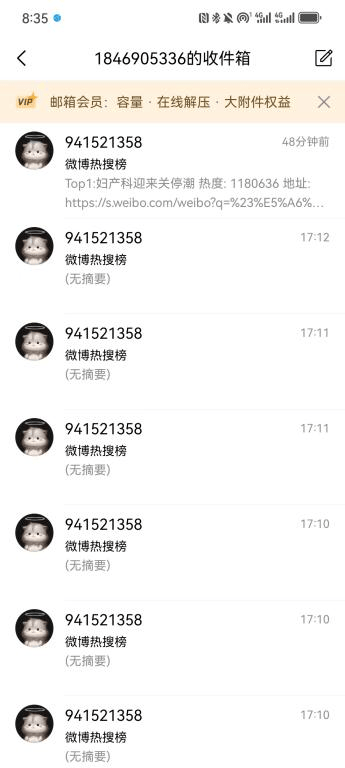

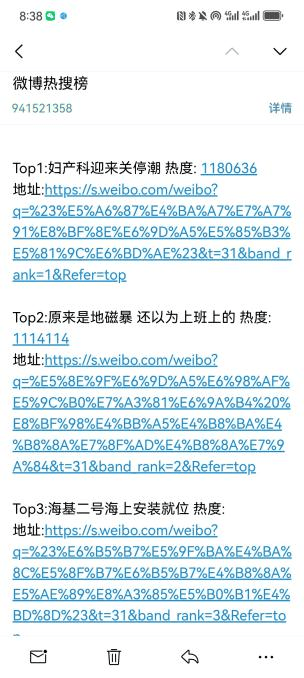

Run results